Software Development at 800 Words Per Minute


Imagine reading code not by looking at the screen, but by listening to interface elements, variables, language constructs and operators read at 800 words per minute (WPM) - over 5 times faster than normal speech. It sounds like gibberish to most, but to me, it’s as natural as you reading this sentence.

If you’ve ever wondered how someone codes without seeing the screen, or if you’re curious about accessibility in the software development process, this post is for you.

Table of Contents

- What Is a Screen Reader?

- Do You Really Understand What It’s Saying?

- What Text-to-Speech Voice Do You Use?

- How Do Screen Readers Work?

- What Applications Are Compatible with Screen Readers?

- Why Develop on Windows?

- What IDE Do You Use?

- What Other Tools Do You Use?

- Infrastructure as Code

- The Fragility of the GUI

- Do You Work on Frontend Code?

- How Do You Read Images?

- How Do You Draw Diagrams?

- How Do You Read Screen Shares?

- Do You Do Pair Programming?

- The Single-Channel Challenge

- Learning at 800 WPM

- Conclusion

What Is a Screen Reader?

A screen reader is software that converts content on a screen into synthesized speech or braille output. Because I am visually impaired, I need a screen reader to be productive on a computer: it reads out what you would normally see on a display.

Screen readers are built into most operating systems: Narrator on Windows, VoiceOver on Apple platforms, and Talkback on Android.

I use NVDA (NonVisual Desktop Access), a free and open-source screen reader on Windows. Here is a video demonstrating use of NVDA with Visual Studio to write a simple application. I don’t know the speech rate of the screen reader in that video, so I’ll estimate it at somewhere between 300 and 400 WPM.

The above video demonstrates some of the things that screen readers verbalize:

- The current keyboard focus. For example, the current line of text when pressing the up / down arrow keys in a text file, or the current character when pressing left / right arrow. When moving about the interface of an application, the current control is announced

- Key presses

- Events like on-screen notifications, new text being written to a terminal, autocomplete suggestions becoming available or alerts in an ARIA live region

I use the computer entirely via keyboard. Besides standard keyboard commands in the operating system or the current application, the screen reader also provides hundreds of additional keyboard commands for navigation and requesting specific information. With NVDA, pressing “h” jumps to the next heading on a web page, caps lock + f12 reads the current time, and caps lock + t reads the title of the currently focused window.

Think of a screen reader like a flashlight illuminating a small part of a dark room. It moves through content linearly—word by word, line by line, or element by element. If you copy the contents of a web page and paste it into a text editor, you’ll get a rough idea of how web pages are read out by a screen reader. There are keyboard shortcuts to rapidly move to different types of content (e.g. headings, links, form fields), but it doesn’t provide an instant “big picture” view of the screen. This is like reading a book rather than scanning a newspaper.

This constraint has shaped how I approach code. Since jumping around a file is slower than having the full context visible at a glance, I focus on understanding systems at a higher level first - mapping out the functions involved and how components interact, then drilling down into specifics. This mental caching reduces the need to move rapidly between different parts of a file or to look at multiple files simultaneously.
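As a rough illustration of this linear model, here is a minimal Python sketch of a reading cursor that only ever sees one element at a time, plus a "jump to next heading" command. The `Element` and `LinearCursor` names are hypothetical and have nothing to do with NVDA's actual implementation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Element:
    kind: str  # e.g. "heading", "paragraph", "link"
    text: str


class LinearCursor:
    """A toy reading cursor: it exposes one element at a time, like the flashlight."""

    def __init__(self, elements: list[Element]) -> None:
        self.elements = elements
        self.position = 0

    def current(self) -> str:
        element = self.elements[self.position]
        return f"{element.kind}: {element.text}"

    def next_element(self) -> str:
        """Move one element forward (roughly, pressing the down arrow)."""
        if self.position < len(self.elements) - 1:
            self.position += 1
        return self.current()

    def next_of_kind(self, kind: str) -> str | None:
        """Jump to the next element of a given kind (roughly, pressing "h" for headings)."""
        for index in range(self.position + 1, len(self.elements)):
            if self.elements[index].kind == kind:
                self.position = index
                return self.current()
        return None  # nothing further down the page; the cursor stays where it is


page = [
    Element("heading", "What Is a Screen Reader?"),
    Element("paragraph", "A screen reader is software that..."),
    Element("link", "NVDA"),
    Element("heading", "How Do Screen Readers Work?"),
    Element("paragraph", "Screen readers don't visually interpret the screen."),
]

cursor = LinearCursor(page)
first = cursor.current()                  # the first heading
second = cursor.next_element()            # the paragraph directly below it
jumped = cursor.next_of_kind("heading")   # skips the link and lands on the next heading
```

The cursor never returns more than one element, which is why shortcuts like `next_of_kind` matter: they speed up movement, but they do not provide an overview.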

Do You Really Understand What It’s Saying?

The typical reaction when listening to this is shock. To most ears, synthetic speech at 800 WPM sounds like a meaningless stream of robot chatter.

Since the rate at which I absorb information is limited by how fast the screen reader is speaking, developing the ability to understand it at higher speech rates is critical. Using a computer at normal human speech rates of 150 WPM is glacially slow.
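To put numbers on that difference, here is a small worked example in Python. The 12,000-word document length is an arbitrary assumption chosen for illustration; only the 150 and 800 WPM figures come from this post.

```python
def listening_minutes(word_count: int, words_per_minute: int) -> float:
    """Minutes needed to listen to word_count words at a given speech rate."""
    return word_count / words_per_minute


DOCUMENT_WORDS = 12_000   # hypothetical document length
NORMAL_SPEECH_WPM = 150
SCREEN_READER_WPM = 800

slow = listening_minutes(DOCUMENT_WORDS, NORMAL_SPEECH_WPM)
fast = listening_minutes(DOCUMENT_WORDS, SCREEN_READER_WPM)
speedup = SCREEN_READER_WPM / NORMAL_SPEECH_WPM

print(f"{slow:.0f} min at 150 WPM, {fast:.0f} min at 800 WPM ({speedup:.1f}x faster)")
# prints "80 min at 150 WPM, 15 min at 800 WPM (5.3x faster)"
```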

Unlike human speech, a screen reader’s synthetic voice reads a word in the same way every time. This makes it possible to get used to how it speaks. At first, it requires conscious effort to understand. With years of practice, comprehension becomes automatic. This is just like learning a new language.

I adjust its speed based on cognitive load. For routine tasks like reading emails, documentation, or familiar code patterns, 800 WPM works perfectly and allows me to process information far faster than one can usually read. I’m not working to understand what the screen reader is saying, so I can focus entirely on processing the meaning of the content. However, I slow down a little when debugging complex logic or working through denser material. At that point, the limiting factor isn’t how fast I can hear the words but how quickly I can understand their meaning.

What Text-to-Speech Voice Do You Use?

The choice of voice is critical for high-speed comprehension. I have two requirements:

- Intelligibility at very high speech rates - Speech must remain clear and understandable when sped up dramatically.

- Minimal speech generation latency - Speech must be synthesized with virtually no processing delay. Additionally, the entire audio pipeline must be responsive with no perceivable delays - this is why I usually avoid Bluetooth audio.

These requirements rule out almost all available voices.

I use Eloquence, which generates speech using formant synthesis. Formant synthesis produces speech from mathematical models of how the human vocal tract creates different sounds. Though formant voices tend to sound more robotic, they are consistent. Since every sound is generated using the same mathematical model, pronunciation is always identical. This predictability makes it possible to understand speech at higher rates. Formant voices also have lower latency.
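To make the idea of formant synthesis more concrete, here is a minimal sketch in Python. It passes a pulse train (standing in for the vocal folds) through a cascade of two-pole resonators, one per formant. This is a textbook-style toy, not how Eloquence or eSpeak is actually implemented, and the formant frequencies and bandwidths are illustrative values rather than measurements of any real voice.

```python
import math

SAMPLE_RATE = 16_000  # samples per second


def resonator_coefficients(frequency_hz: float, bandwidth_hz: float) -> tuple[float, float, float]:
    """Coefficients of a two-pole digital resonator tuned to one formant."""
    period = 1.0 / SAMPLE_RATE
    c = -math.exp(-2.0 * math.pi * bandwidth_hz * period)
    b = 2.0 * math.exp(-math.pi * bandwidth_hz * period) * math.cos(
        2.0 * math.pi * frequency_hz * period
    )
    a = 1.0 - b - c  # normalizes the gain at 0 Hz to 1
    return a, b, c


def apply_resonator(signal: list[float], frequency_hz: float, bandwidth_hz: float) -> list[float]:
    """Filter the signal with y[n] = a*x[n] + b*y[n-1] + c*y[n-2]."""
    a, b, c = resonator_coefficients(frequency_hz, bandwidth_hz)
    output = []
    previous, before_previous = 0.0, 0.0
    for sample in signal:
        value = a * sample + b * previous + c * before_previous
        output.append(value)
        before_previous, previous = previous, value
    return output


def pulse_train(pitch_hz: int, duration_ms: int) -> list[float]:
    """One impulse per pitch period, standing in for the vocal folds."""
    period_samples = round(SAMPLE_RATE / pitch_hz)
    total_samples = SAMPLE_RATE * duration_ms // 1000
    return [1.0 if n % period_samples == 0 else 0.0 for n in range(total_samples)]


# Illustrative (frequency, bandwidth) pairs in Hz for a vaguely "ah"-like vowel.
VOWEL_FORMANTS = [(700, 130), (1200, 70), (2600, 160)]


def synthesize_vowel(pitch_hz: int = 120, duration_ms: int = 50) -> list[float]:
    signal = pulse_train(pitch_hz, duration_ms)
    for frequency_hz, bandwidth_hz in VOWEL_FORMANTS:
        signal = apply_resonator(signal, frequency_hz, bandwidth_hz)
    return signal


first_take = synthesize_vowel()
second_take = synthesize_vowel()
```

Because the output is a pure function of a handful of parameters, `first_take` and `second_take` are identical sample for sample. That is the consistency described above, and the small amount of arithmetic per sample hints at why latency can be low.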

The options for high-speed text-to-speech are extremely limited. Besides Eloquence, there’s eSpeak, which is open source but sounds even more robotic. That’s it - this is a very niche use case.

While I could switch to another voice if a better one becomes available, there would be an adjustment period during which I’d have to slow down and retrain myself to understand its speech patterns. After years of using Eloquence, I have become accustomed to its specific way of speaking.

In contrast, more commonly used voices use concatenative synthesis (stitching together pre-recorded speech segments) or neural synthesis (AI systems trained on human speech datasets). While these approaches can sound remarkably natural, they introduce variations in timing, pitch, and emphasis that mimic human speech. This makes them much harder to understand at higher speeds. Additionally, natural sounding voices typically have higher latency. Like working with a laggy monitor, even small delays are disruptive.

How Do Screen Readers Work?

This is where things get technical, and why the choice of GUI framework matters.

Screen readers don’t visually interpret the screen. Instead, they get information from the accessibility tree, a structure that contains information about UI elements.

Here is how a submit button <button>Submit</button> on a web page gets read:

- The browser constructs the Document Object Model (DOM) for the web page and renders the element visually as a button labeled “Submit”.

- The browser exposes information about this button in the accessibility tree. It is represented by a node with a button role and a “Submit” label.

- Screen readers use APIs provided by the operating system to query the accessibility tree. With this information, they read the element’s label (“Submit”) and role (button).

When something interesting happens (such as a change in focus), the appropriate accessibility event gets emitted. Screen readers listen for these events to verbalize changes.

Screen readers work in a similar way for other types of applications.
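The flow above can be sketched as a toy model in Python. The class and method names here (`AccessibleNode`, `ToyAccessibilityTree`, `ToyScreenReader`) are hypothetical; real platforms expose this through operating system accessibility APIs with far richer data, so treat this as a conceptual sketch only.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AccessibleNode:
    role: str  # what kind of control this is, e.g. "button"
    name: str  # the label to announce, e.g. "Submit"
    children: list[AccessibleNode] = field(default_factory=list)


class ToyAccessibilityTree:
    """Stands in for the tree that an application exposes to assistive technology."""

    def __init__(self, root: AccessibleNode) -> None:
        self.root = root
        self._focus_listeners: list[Callable[[AccessibleNode], None]] = []

    def add_focus_listener(self, listener: Callable[[AccessibleNode], None]) -> None:
        self._focus_listeners.append(listener)

    def move_focus(self, node: AccessibleNode) -> None:
        # Emit a focus event to every registered listener.
        for listener in self._focus_listeners:
            listener(node)


class ToyScreenReader:
    """Listens for focus events and turns them into text to be spoken."""

    def __init__(self) -> None:
        self.spoken: list[str] = []

    def on_focus_changed(self, node: AccessibleNode) -> None:
        # Announce the label first, then the role.
        self.spoken.append(f"{node.name}, {node.role}")


submit = AccessibleNode(role="button", name="Submit")
tree = ToyAccessibilityTree(AccessibleNode(role="document", name="Checkout", children=[submit]))

reader = ToyScreenReader()
tree.add_focus_listener(reader.on_focus_changed)
tree.move_focus(submit)
```

After `move_focus(submit)`, `reader.spoken` contains `"Submit, button"`. Note that the reader never looks at pixels: it only knows what the tree tells it.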

What Applications Are Compatible with Screen Readers?

An application’s compatibility with screen readers (and other programmatic means of accessing the UI) depends on how well it exposes information about its UI in the accessibility tree.

- If no information is exposed in the accessibility tree, it is unusable with a screen reader. For example, most games draw directly to the screen without exposing any accessibility information.

- Some GUI frameworks like Flutter or Qt have partial support for screen readers, but bugs in how they expose certain elements in the accessibility tree mean applications written in these frameworks can be difficult to use.

- Applications that use native controls provided by the operating system usually work well, since the native controls already do the heavy lifting of exposing their state in the accessibility tree.

- For websites, web applications or applications using Electron, it depends on how well web accessibility best practices are implemented. For example, implementing that submit button by using a styled div with an onclick handler means the element is only exposed as a node with the text “Submit” without any indication that it is a button.

Besides exposing state in the accessibility tree, the application should also not require the use of a mouse, since most screen reader users use the keyboard exclusively. It should be possible to navigate to every part of the application via the keyboard.
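The styled div example can be illustrated with a small Python sketch that approximates how roles are derived from markup. It uses the standard library `html.parser` module and a deliberately tiny, hypothetical `IMPLICIT_ROLES` table; real browsers follow much more detailed rules, and this toy does not handle void elements such as `<br>`.

```python
from __future__ import annotations

from html.parser import HTMLParser

# A deliberately tiny subset of the roles that browsers assign to elements by default.
IMPLICIT_ROLES = {"button": "button", "h1": "heading"}


class RoleCollector(HTMLParser):
    """Collects (role, text) pairs, roughly what a screen reader would be told."""

    def __init__(self) -> None:
        super().__init__()
        self.nodes: list[tuple[str | None, str]] = []
        self._open_roles: list[str | None] = []  # one entry per open element

    def handle_starttag(self, tag, attrs):
        explicit_role = dict(attrs).get("role")
        # An explicit role attribute wins; otherwise fall back to the implicit role.
        self._open_roles.append(explicit_role or IMPLICIT_ROLES.get(tag))

    def handle_endtag(self, tag):
        if self._open_roles:
            self._open_roles.pop()

    def handle_data(self, data):
        text = data.strip()
        if text:
            role = self._open_roles[-1] if self._open_roles else None
            self.nodes.append((role, text))


def exposed_nodes(html: str) -> list[tuple[str | None, str]]:
    collector = RoleCollector()
    collector.feed(html)
    collector.close()
    return collector.nodes


native = exposed_nodes("<button>Submit</button>")
div_soup = exposed_nodes('<div class="btn" onclick="submit()">Submit</div>')
patched = exposed_nodes('<div role="button" tabindex="0" onclick="submit()">Submit</div>')
```

Here `native` is `[("button", "Submit")]`, while `div_soup` is `[(None, "Submit")]`: the text survives but the role is lost. Adding `role="button"` restores the announcement in `patched`, though a native button is still preferable because a role attribute alone does not add keyboard behavior.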

Why Develop on Windows?

Every developer asks me this question, usually with barely concealed horror. The answer is pragmatic: Windows is still the best operating system for screen reader users.

Though using a Mac is possible, I prefer not to risk doing so professionally. The built-in VoiceOver screen reader on macOS has been neglected, with severe bugs left unfixed for years. VoiceOver’s interaction model makes using certain types of applications like web browsers less efficient, as more keystrokes are required for navigation. The accessibility APIs Apple provides are also insufficient for a third-party alternative to be developed.

Although the Orca screen reader is available on Linux, there are numerous problems with Linux accessibility. Even something as basic as getting audio output to work reliably is a challenge. I would love to be on Linux, but this isn’t practical for me right now.

Windows might not be trendy among developers, but it’s where accessibility works best. I don’t have to worry about whether I can get audio working reliably. The NVDA screen reader works on Windows and is free and open source, actively maintained, and designed by people who are screen reader users themselves.

That said, I’m not actually developing on Windows in the traditional sense. WSL2 gives me a full Linux environment where I can run Docker containers, use familiar command-line tools, and run the same scripts and tools that everyone uses. Windows is just the accessibility layer on top of my real development environment.

What IDE Do You Use?

I use VS Code. Microsoft has made accessibility a core engineering priority, treating accessibility bugs with the same urgency as bugs affecting visual rendering. The VS Code team regularly engages with screen reader users, and it shows in the experience.

Here’s what makes VS Code work for me:

- Consistent keyboard shortcuts across all features, and the ability to jump to any part of the interface by keyboard

- Excellent screen reader announcements for IntelliSense and error messages

- Use of audio cues to indicate errors or warnings on the current line

- Continued commitment to ensuring accessibility of new features and fixing accessibility regressions.

What Other Tools Do You Use?

Beyond the IDE, development involves many other tools: documentation sites, bug trackers, team communication platforms and code review systems. Ideally, these tools are accessible and work well with screen readers.

Usually, accessibility isn’t perfect and there are specific screens or features that don’t work well with a screen reader. If I’m really unlucky, the tool may be completely unusable. In these situations, I use the following strategies:

- Finding Alternatives: unless I’m working with a team-wide tool like GitLab, I’m often able to find alternatives that work better for me. For example, Postman is popular for API testing but is unusable with a screen reader. I use a combination of curl and the VS Code REST Client extension instead.

- Custom Userscripts: for web applications, I use Greasemonkey to inject accessibility fixes into websites. For example, I had to write a userscript that adds table semantics to GitLab’s diff viewer. Without proper table semantics, code reviews were time-consuming since I couldn’t easily navigate to the next or previous line when reviewing code. This can be a tedious process as it requires reverse engineering the application to find the minimal changes to make to its DOM to achieve the desired effect.

- API Integration: if the interface for an application is inaccessible and an API is available, I’ll write command-line tools or simple scripts to do what I need to. Large Language Models (LLMs) are helpful in these situations.

- Reporting Bugs: I report issues when I find them, but there’s a reason why this is last on the list. Accessibility bugs rarely get prioritized unless there is a strong accessibility mandate. For example, I reported the issue with tables on GitLab in 2019 and the linked Postman issue above has been open since 2017. Hence, I need to take matters into my own hands and work around such issues myself whenever I can.

Infrastructure as Code

The shift toward Infrastructure as Code (IaC) is a huge accessibility win. Instead of clicking through many AWS console screens to set up a server, I can define it in a few lines of code:

    resource "aws_instance" "web_server" {
      ami           = "ami-0c55b159cbfafe1d0"
      instance_type = "t2.micro"

      tags = {
        Name        = "WebServer"
        Environment = "Production"
      }
    }

Text files are infinitely more accessible than graphical interfaces. I can read every configuration option, use standard development tools for editing and version control, and deploy infrastructure changes from the command line.

The Fragility of the GUI

Over the years, I’ve started seeing dependence on GUI-based tools as a potential liability, since changes or regressions can make them less efficient for me to use or, in the worst case, remove my ability to use them entirely. I’m always anxious whenever there is a UI rewrite for an application that I use, since that often regresses accessibility. For example, when Microsoft rewrote some Windows 11 screens to use a new list control, they lost support for first-letter navigation, which moves focus to the next item in a list starting with a letter by typing that letter.

Moving from clicking through screens to IaC is a great example of how I limit my exposure to the GUI.

However, I can’t avoid using the GUI entirely. I manage my risk by working with development ecosystems that don’t require use of a specific GUI-based tool. I’ve worked in Go, Python, Rust and TypeScript. For those languages, I can always fall back to the command line if necessary. For this reason, I avoid mobile development, since it requires the use of specific IDEs [1].

Do You Work on Frontend Code?

Creating prototypes or updating screens to match a design specification is inherently visual. Frontend will never be my strong suit, though LLMs can bridge this gap somewhat.

Though I prefer to work on backend code, I’ve been able to contribute meaningfully on the frontend as well, since there is a lot of non-visual work involved. For example, refactoring how state is tracked, communication with the backend via APIs and implementing new features with existing design components.

How Do You Read Images?

Though I can use magnification to look at the screen, it is still a last resort since this is slow for me.

Screen readers can’t interpret images, since they’re just pixels without semantic meaning. This is why alt text is crucial. Unfortunately, it is rare to find meaningful alt text in the wild and most applications don’t support setting alt text on images.

For images without meaningful alt text, I use Optical Character Recognition (OCR) to extract text content. However, this only works well for clear screenshots of text.

LLMs have been a game-changer. They work much better than OCR for more complex images like application screenshots, code or tables. LLMs can describe UI layouts, read text from complex images, and explain diagrams or charts. I can also prompt for the specific information I need. For example, “What error is highlighted in red in this screenshot?” or “Transcribe the code in this screenshot exactly without any other commentary”.

I have to be careful about hallucinations and avoid uploading sensitive information. Even with these limitations, AI-powered image descriptions are incredibly helpful.

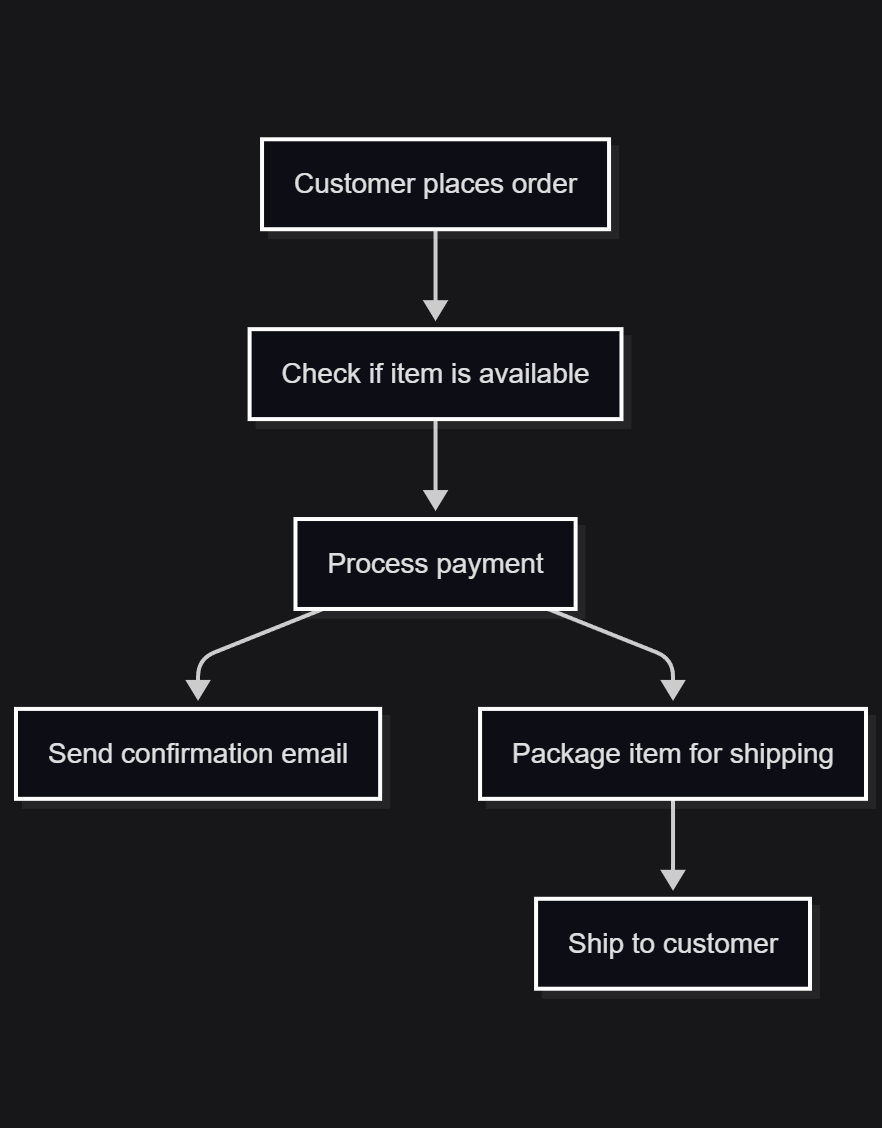

How Do You Draw Diagrams?

Sometimes, I need to create diagrams for documentation, system design interviews or to explain complex ideas.

I use markup languages like Mermaid for describing diagrams. Writing diagrams as code means I can version control them, generate them programmatically, and most importantly, read the source to understand them.

    graph TD
        A[Customer places order] --> B[Check if item is available]
        B --> C[Process payment]
        C --> D[Send confirmation email]
        C --> E[Package item for shipping]
        E --> F[Ship to customer]

If I need to understand a programmatically generated diagram, I read its source when possible. That is usually faster than parsing it visually.
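As an example of generating a diagram programmatically, here is a minimal Python sketch that emits Mermaid flowchart source from a dictionary of node labels and a list of edges. The `to_mermaid` helper is hypothetical and covers only the simple `A[Label] --> B[Label]` form used in the order flow example.

```python
from __future__ import annotations


def to_mermaid(nodes: dict[str, str], edges: list[tuple[str, str]], direction: str = "TD") -> str:
    """Render a flowchart as Mermaid source, labeling each node the first time it appears."""
    lines = [f"graph {direction}"]
    declared: set[str] = set()

    def reference(node_id: str) -> str:
        if node_id in declared:
            return node_id  # already labeled earlier, so the bare ID is enough
        declared.add(node_id)
        return f"{node_id}[{nodes[node_id]}]"

    for source, target in edges:
        lines.append(f"    {reference(source)} --> {reference(target)}")
    return "\n".join(lines)


order_nodes = {
    "A": "Customer places order",
    "B": "Check if item is available",
    "C": "Process payment",
    "D": "Send confirmation email",
    "E": "Package item for shipping",
    "F": "Ship to customer",
}
order_edges = [("A", "B"), ("B", "C"), ("C", "D"), ("C", "E"), ("E", "F")]

diagram_source = to_mermaid(order_nodes, order_edges)
print(diagram_source)
```

The printed source is plain text, so it can be read line by line, diffed, and committed like any other file.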

This approach has an unexpected benefit: diagrams as code encourages thinking about the logical structure rather than visual aesthetics. The result is often clearer and more maintainable.

I’ve found that LLMs work well for generating Mermaid diagrams from natural language descriptions.

How Do You Read Screen Shares?

Like images, a screen share is just a stream of pixels with no semantic meaning. Hence, there is nothing for a screen reader to verbalize.

I work around the issue by following along on my computer where possible. For example:

- Getting the materials for a presentation and having it open to the speaker’s current slide

- If someone is sharing a web page like internal documentation or story tickets, I have the same page open on my computer

- If someone is walking through code, verbalizing the file name and current line when navigating to a new file or section of code allows me to follow along on my machine

Do You Do Pair Programming?

I usually prefer not to. When I do, I use VS Code’s Live Share feature or share my screen for the other person to drive.

Pair programming is mentally exhausting for me in ways that solo development isn’t. There’s significant cognitive overhead when I’m having a conversation, maintaining shared context about where we are in the codebase and listening to my screen reader simultaneously since all this is done by ear.

Other forms of collaboration like code reviews, design discussions and debugging sessions work well for me. It’s the real-time, same-screen aspect of traditional pair programming that creates friction.

The Single-Channel Challenge

Just like you can’t easily follow two people speaking at once, I can’t listen to my screen reader and someone else speaking simultaneously - they’re both competing for the same audio channel.

In these situations, I use my computer during natural pauses in the conversation or presentation. I might look back at something relevant to what was just said or navigate ahead to the section being discussed next.

If the speaker resumes talking while I’m still using my computer, I have to context switch between both audio streams. I can process both simultaneously, but only for short periods due to the intense focus required. There’s always a mental cost to switching back and forth - I might lose my place or miss something important. This was difficult at first, but like any skill, it gets easier with practice.

When the speaker relies on visual references like “this error here” or rapidly switches between screens without verbal cues, it is much harder for me to stay in sync. In these situations, I’ll ask for brief descriptions - “in the UserService class” - so I can follow along on my machine. It’s a small adjustment that makes a huge difference for me.

When materials for the discussion or presentation are available in advance, the process becomes much smoother. Being familiar with the content beforehand reduces the cognitive load significantly since I can read ahead of time. This also leads to more productive discussions, as I can come prepared with questions.

Learning at 800 WPM

My learning strategies take advantage of being able to read large amounts of text quickly. However, what I said earlier about slowing down when the cognitive load is higher still applies, so my ability to understand the content becomes the bottleneck.

I learn primarily through text-based resources. Well-structured documentation with headings and lists allows me to navigate and skim for relevant information quickly with keyboard shortcuts, much like scanning a page visually. Good semantic structure improves readability for everyone.

I use e-books for depth and online documentation or text-based tutorials otherwise. LLMs are also very helpful for content personalized to my learning needs. Images without meaningful alt text can be an issue though.

For video content like conference talks, I read transcripts when available. Otherwise, I increase the playback speed to 2x. It is much harder to understand human speech at higher playback rates, since it is not uniform like synthetic speech.

Conclusion

Most development content online about screen readers focuses on how to make applications accessible. There’s much less written about screen reader users as participants in the development process itself. I get asked the same questions repeatedly: “How do you actually write code?” “What tools can you use?” “Do you do pair programming?” I wrote this post to answer these questions. If you’ve ever been curious about how development works from this perspective, I hope you’ve found this useful.

I also wanted to talk about the importance of accessibility in developer tooling and software in general. Every day, I experience the direct consequences of decisions made by other developers. When someone chooses semantic HTML over div soup or implements support for proper keyboard navigation, it determines how easily I can do my job. These aren’t abstract accessibility guidelines - they’re the difference between productive collaboration, wasting time on workarounds or being unable to use an application entirely.

Despite the challenges I’ve outlined, I want to end on a positive note. Software development has been incredibly fulfilling for me. Everyone has their own obstacles to navigate. Mine happen to involve using a screen reader and overcoming accessibility barriers.

The field isn’t perfect, but compared to many other careers, software development is remarkably adaptable to different ways of working. As long as you can think through problems and translate ideas into code, there’s a place for you here.


Footnotes

[1] It may be theoretically possible to develop mobile apps for Android or iOS without using Android Studio or Xcode, but this would be difficult without using cross-platform alternatives like React Native. I would love to be proven wrong though.